I have a web hosting plan at OVH, and I'm not that happy with the website statistics tools it offers. Urchin is deprecated, OVHcloud Web Statistics is still young, and Awstats, which I find great, only shows daily stats.

This is why I decided to find a way to download the logs and process them manually.

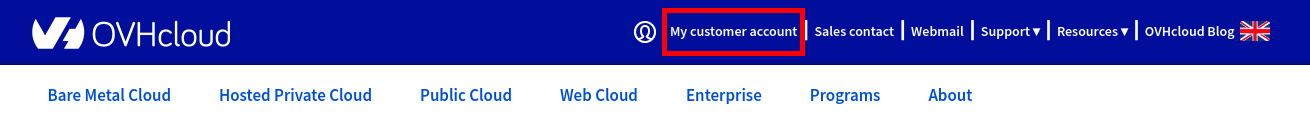

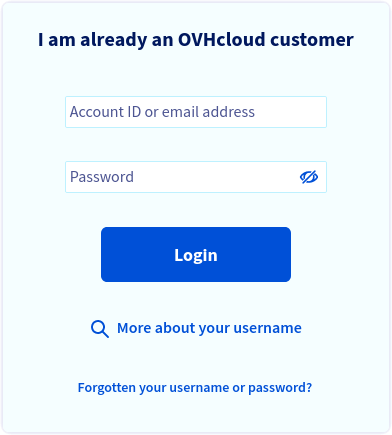

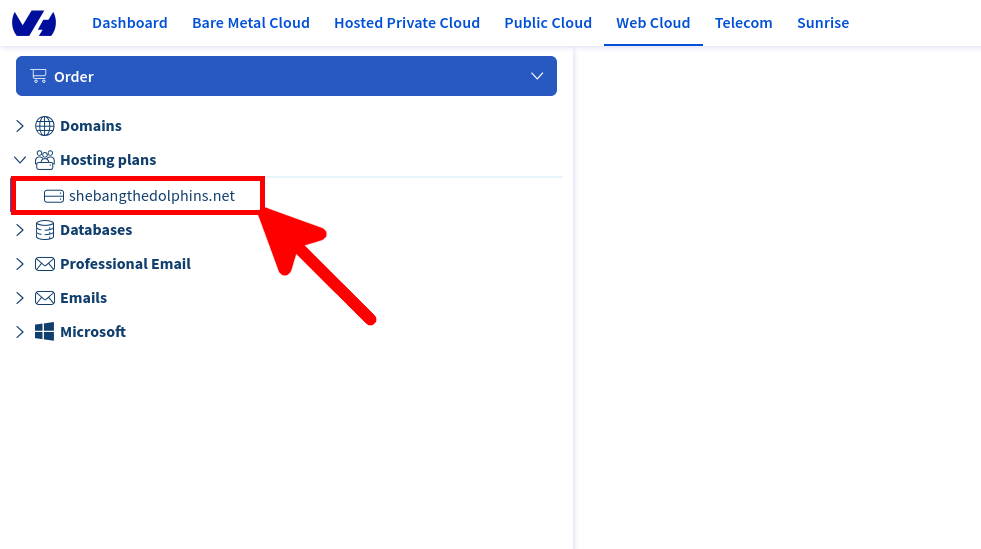

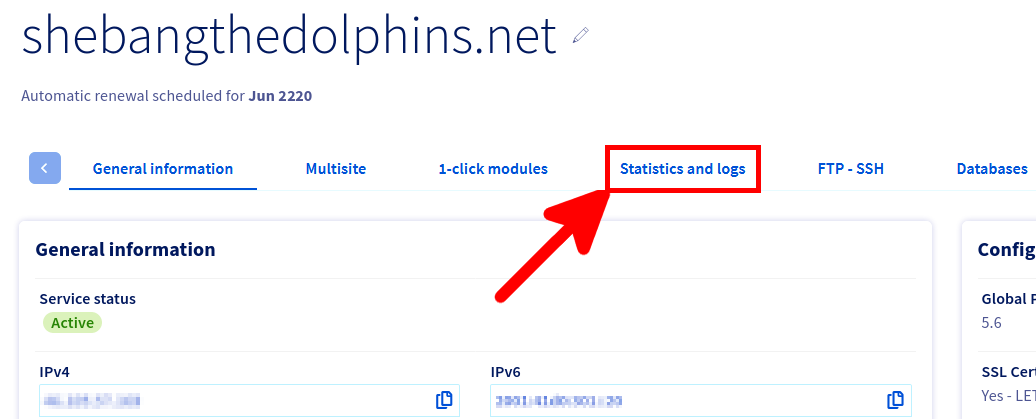

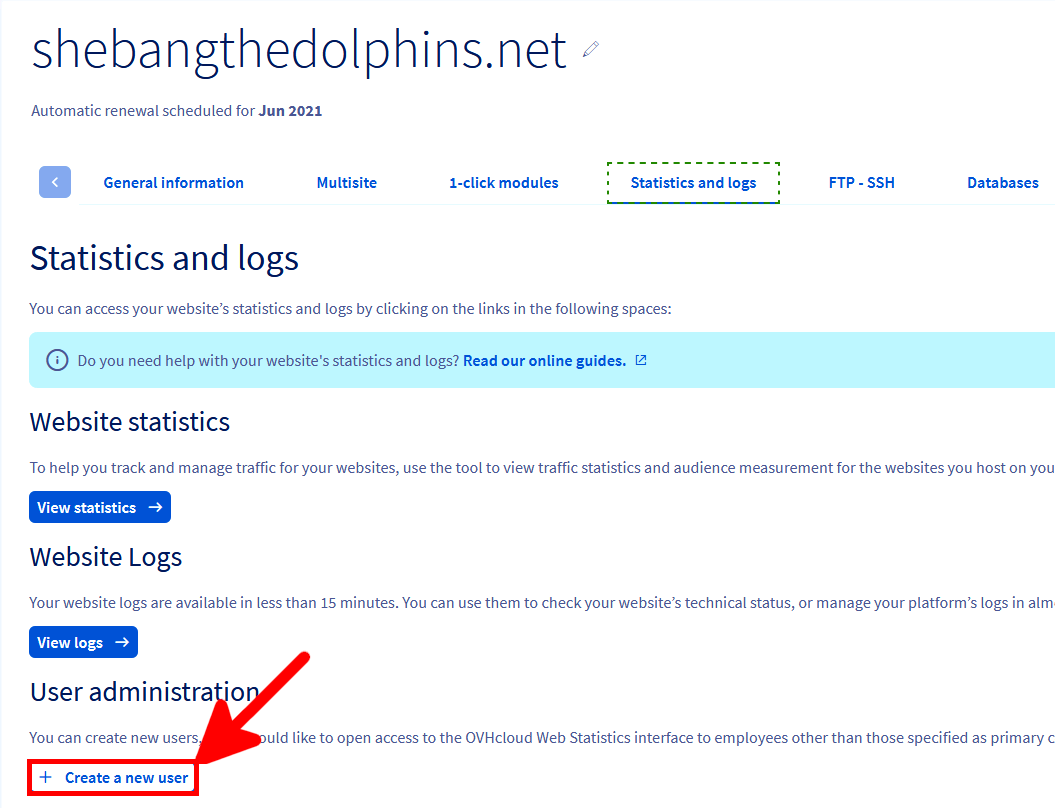

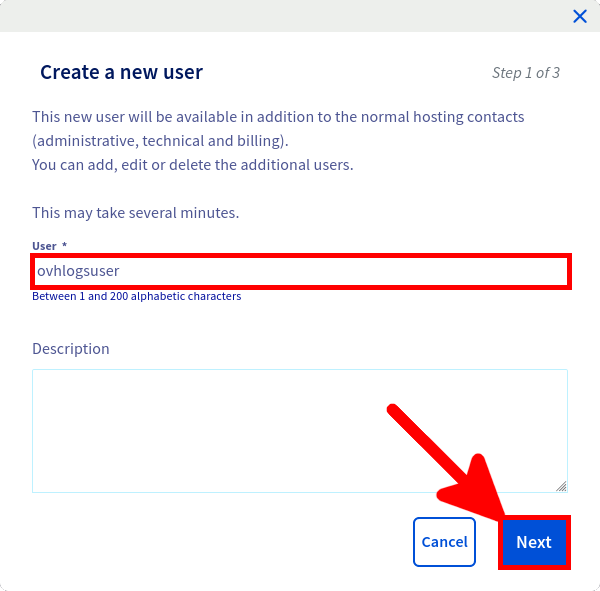

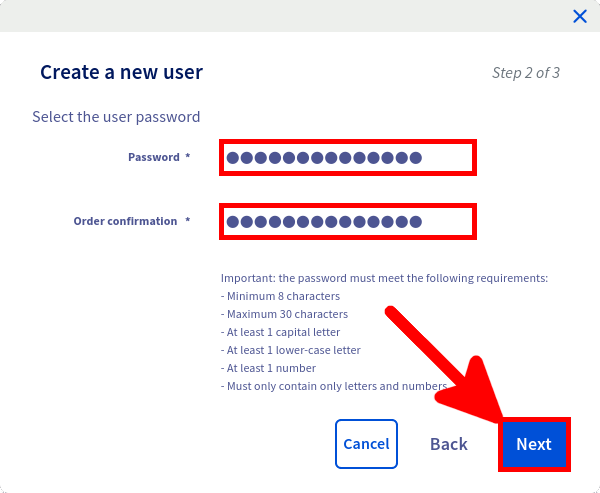

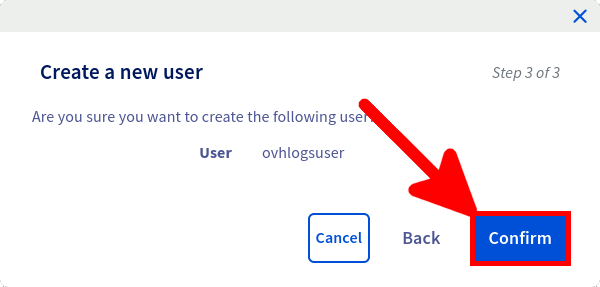

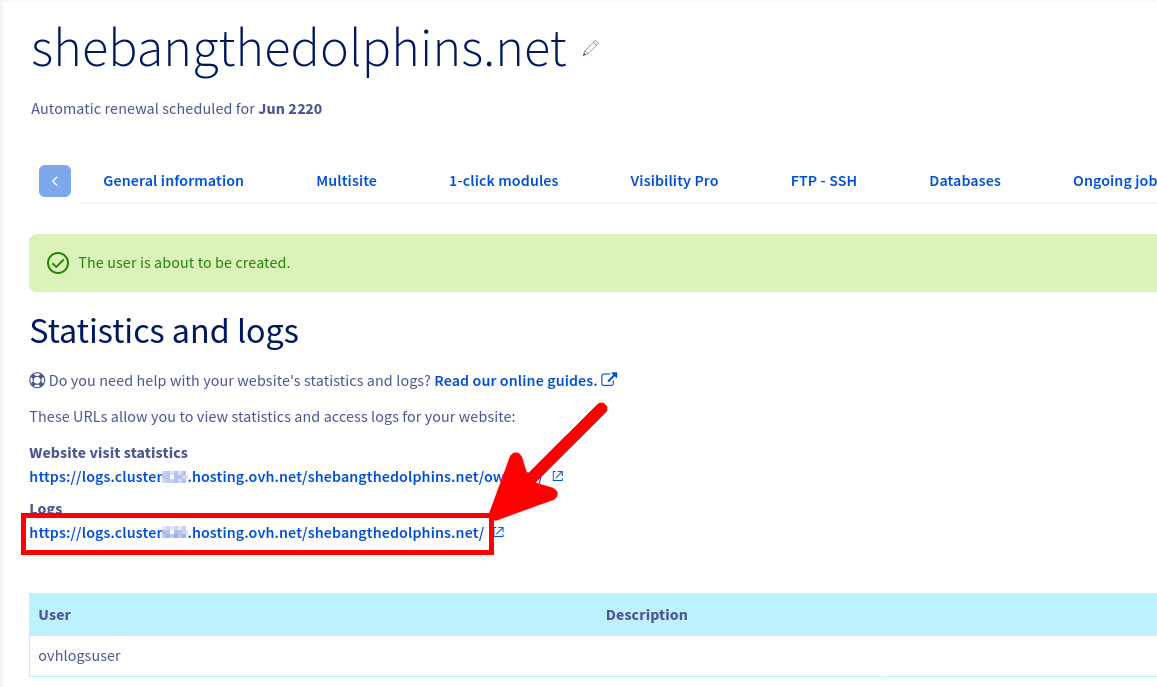

The first thing to do is to create a dedicated log user from the OVHcloud Web Control Panel.

We now have everything we need to download our logs.

[user@host ~]$ USR=ovhlogsuser

[user@host ~]$ PASS=Myverycomplexpassw0rD

[user@host ~]$ URL=https://log.clusterXXX.hosting.ovh.net/shebangthedolphins.net/

[user@host ~]$ DOMAIN=$(awk -F '/' '{ print $4 }' <<< $URL)

[user@host ~]$ DOMAIN=shebangthedolphins.net

[user@host ~]$ wget --http-user="$USR" --http-password="$PASS" -A '*gz' -r -nd "$URL/logs/logs-10-2020/"

[user@host ~]$ ls -lh

total 596K

-rw------- 1 std std 55 10 déc. 2019 robots.txt.tmp

-rw-r--r-- 1 std std 24K 2 oct. 02:56 shebangthedolphins.net-01-10-2020.log.gz

-rw-r--r-- 1 std std 17K 3 oct. 02:19 shebangthedolphins.net-02-10-2020.log.gz

[…]

-rw-r--r-- 1 std std 14K 31 oct. 06:08 shebangthedolphins.net-30-10-2020.log.gz

-rw-r--r-- 1 std std 52K 1 nov. 06:08 shebangthedolphins.net-31-10-2020.log.gz

[user@host ~]$ wget --http-user="$USR" --http-password="$PASS" "$URL"/logs/logs-$(/bin/date --date='1 days ago' '+%m-%Y')/"$DOMAIN"-$(/bin/date --date='1 days ago' '+%d-%m-%Y').log.gz

[user@host ~]$ ls -lh

total 20K

-rw-r--r-- 1 std std 18K 25 nov. 06:29 shebangthedolphins.net-24-11-2020.log.gz

[user@host ~]$ perl-rename -v 's/(.*)-(\d\d)-(\d\d)-(\d\d\d\d)(.*)/$4-$3-$2-$1$5/' *gz

[user@host ~]$ ls -lh

total 596K

-rw-r--r-- 1 std std 24K 2 oct. 02:56 2020-10-01-shebangthedolphins.net.log.gz

-rw-r--r-- 1 std std 17K 3 oct. 02:19 2020-10-02-shebangthedolphins.net.log.gz

-rw-r--r-- 1 std std 14K 4 oct. 02:32 2020-10-03-shebangthedolphins.net.log.gz

[user@host ~]$ wget --http-user="$USR" --http-password="$PASS" "$URL"/logs/logs-$(/bin/date --date='1 days ago' '+%m-%Y')/"$DOMAIN"-$(/bin/date --date='1 days ago' '+%d-%m-%Y').log.gz -O /tmp/$(/bin/date --date='1 days ago' '+%Y-%m-%d')-"$DOMAIN".log.gz

[user@host ~]$ ls -lh /tmp/*gz

-rw-r--r-- 1 std std 18K 25 nov. 06:29 /tmp/2020-11-24-shebangthedolphins.net.log.gz

[user@host ~]$ for DAY in $(seq 1 30); do wget --http-user="$USR" --http-password="$PASS" "$URL"/logs/logs-$(/bin/date --date=''$DAY' days ago' '+%m-%Y')/"$DOMAIN"-$(/bin/date --date=''$DAY' days ago' '+%d-%m-%Y').log.gz -O /tmp/$(/bin/date --date=''$DAY' days ago' '+%Y-%m-%d')-"$DOMAIN".log.gz; done

[user@host ~]$ ls -lh /tmp/*gz

-rw-r--r-- 1 std std 17K 26 oct. 06:12 /tmp/2020-10-25-shebangthedolphins.net.log.gz

-rw-r--r-- 1 std std 14K 27 oct. 06:44 /tmp/2020-10-26-shebangthedolphins.net.log.gz

[...]

-rw-r--r-- 1 std std 18K 24 nov. 06:38 /tmp/2020-11-23-shebangthedolphins.net.log.gz

-rw-r--r-- 1 std std 18K 25 nov. 06:29 /tmp/2020-11-24-shebangthedolphins.net.log.gz
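The URL and the local file name used in the loop above can also be built in one place. This is only a sketch: `log_url` and `log_file` are hypothetical helper names, the cluster name stays the same `clusterXXX` placeholder as above, and GNU `date` is assumed for the `--date` option.

```shell
#!/bin/bash
# Placeholder values, same as above (clusterXXX must be replaced by the real cluster).
URL=https://log.clusterXXX.hosting.ovh.net/shebangthedolphins.net/
DOMAIN=shebangthedolphins.net

# log_url DATE: print the remote URL of the log for DATE (YYYY-MM-DD).
# $URL already ends with a slash, so none is added before "logs".
log_url() {
    local month day
    month=$(date --date="$1" '+%m-%Y')
    day=$(date --date="$1" '+%d-%m-%Y')
    printf '%slogs/logs-%s/%s-%s.log.gz\n' "$URL" "$month" "$DOMAIN" "$day"
}

# log_file DATE: print the local file name, date first so that files sort by day.
log_file() {
    printf '%s-%s.log.gz\n' "$1" "$DOMAIN"
}

log_url 2020-11-24
log_file 2020-11-24

# Possible use with wget (not run here, it needs valid credentials):
#   D=$(date --date='1 days ago' '+%Y-%m-%d')
#   wget --http-user="$USR" --http-password="$PASS" "$(log_url "$D")" -O "/tmp/$(log_file "$D")"
```

Keeping the date arithmetic in a single variable avoids calling `date` three times per download with slightly different formats.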

PS C:\Users\std> $user = "ovhlogsuser"

PS C:\Users\std> $pass = "Myverycomplexpassw0rD"

PS C:\Users\std> $secpasswd = ConvertTo-SecureString $pass -AsPlainText -Force

PS C:\Users\std> $credential = New-Object System.Management.Automation.PSCredential($user, $secpasswd)

PS C:\Users\std> $domain = "shebangthedolphins.net"

PS C:\Users\std> $url = "https://log.clusterXXX.hosting.ovh.net/$domain/"

PS C:\Users\std> Invoke-WebRequest -Credential $credential -Uri ("$url" + "logs/logs-" + $((Get-Date).AddDays(-1).ToString("MM-yyyy")) + "/$domain" + "-" + $((Get-Date).AddDays(-1).ToString("dd-MM-yyyy")) + ".log.gz") -OutFile "$((Get-Date).AddDays(-1).ToString("yyyy-MM-dd"))-$domain.log"

PS C:\Users\std> dir

Directory: C:\Users\std

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a---- 05/12/2020 15:45 360238 2020-12-04-shebangthedolphins.net.log

PS C:\Users\std> 1..30 | ForEach-Object { Invoke-WebRequest -Credential $credential -Uri ("$url" + "logs/logs-" + $((Get-Date).AddDays(-"$_").ToString("MM-yyyy")) + "/$domain" + "-" + $((Get-Date).AddDays(-"$_").ToString("dd-MM-yyyy")) + ".log.gz") -OutFile "$((Get-Date).AddDays(-"$_").ToString("yyyy-MM-dd"))-$domain.log" }

Now that we've downloaded our log files, we can use the command line to extract useful information.
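The pipelines below rely on the position of whitespace-separated fields in each log line. As a minimal sketch, assuming the logs follow the Apache combined layout (the sample line is invented for illustration, it does not come from a real log, and `show_fields` is a hypothetical helper), `awk` sees the client IP in `$1`, the requested path in `$7`, the status code in `$9` and the referrer in `$11`:

```shell
#!/bin/bash
# Invented log line in Apache combined format (assumption, for illustration only).
LINE='203.0.113.7 - - [24/Nov/2020:10:15:32 +0100] "GET /windows_icacls.html HTTP/1.1" 200 18342 "https://www.google.com/" "Mozilla/5.0 (X11; Linux x86_64)"'

# show_fields LINE: print the fields used by the commands in this article.
show_fields() {
    awk '{
        print "ip=" $1
        print "path=" $7
        print "status=" $9
        print "referrer=" $11
    }' <<< "$1"
}

show_fields "$LINE"
```

Note that the referrer keeps its surrounding double quotes, which is why the results below are displayed with quotes.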

[user@host ~]$ DOMAIN=shebangthedolphins.net

[user@host ~]$ zgrep -viE 'Bytespider|Trident|bot|404|GET \/ HTTP|BingPreview|Seekport Crawler' 2020-11-24-shebangthedolphins.net.log.gz | grep html | awk '{ print $1" "$11 }' | sort | uniq | awk '{ print $2 }' | sort | uniq -c | sort -n | tr -s "[ ]" | sed 's/^ //' | grep "$DOMAIN"

1 "http://shebangthedolphins.net/backup_burp.html"

1 "http://shebangthedolphins.net/backup_burp.html"

1 "http://shebangthedolphins.net/prog_autoit_backup.html"

1 "http://shebangthedolphins.net/prog_powershell_kesc.html"

1 "http://shebangthedolphins.net/vpn_ipsec_06linux-to-linux_tunnel-x509.html"

1 "https://shebangthedolphins.net/fr/windows_grouppolicy_execute_powershell_script.html"

1 "https://shebangthedolphins.net/gnulinux_courier.html"

1 "https://shebangthedolphins.net/gnulinux_vnc_remotedesktop.html"

1 "https://shebangthedolphins.net/vpn_openvpn_windows_server.html"

1 "https://shebangthedolphins.net/windows_icacls.html"

1 "http://www.shebangthedolphins.net/vpn_ipsec_03linux-to-windows_transport-psk.html"

2 "https://shebangthedolphins.net/windows_mssql_alwayson.html"

3 "https://shebangthedolphins.net/fr/vpn_openvpn_buster.html"

7 "https://shebangthedolphins.net/ubiquiti_ssh_commands.html"

[user@host ~]$ DOMAIN=shebangthedolphins.net

[user@host ~]$ for i in *.log.gz; do echo "------------"; echo "$i"; zgrep -viE 'Bytespider|Trident|bot|404|GET \/ HTTP|BingPreview|Seekport Crawler' "$i" | grep html | awk '{ print $1" "$11 }' | grep "$DOMAIN" | sort | uniq | awk '{ print $2 }' | wc -l; done

------------

2020-11-19-shebangthedolphins.net.log.gz

19

------------

2020-11-20-shebangthedolphins.net.log.gz

24

------------

2020-11-21-shebangthedolphins.net.log.gz

8

------------

2020-11-22-shebangthedolphins.net.log.gz

16

------------

2020-11-23-shebangthedolphins.net.log.gz

15

------------

2020-11-24-shebangthedolphins.net.log.gz

13

[user@host ~]$ DOMAIN=shebangthedolphins.net

[user@host ~]$ YEAR=2020

[user@host ~]$ for i in $(seq -w 1 12); do echo "------------"; echo "$YEAR-$i"; zgrep -viE 'Bytespider|Trident|bot|404|GET \/ HTTP|BingPreview|Seekport Crawler' $YEAR-"$i"*.log.gz | grep html | awk '{ print $1" "$11 }' | grep "$DOMAIN" | sort | uniq | awk '{ print $2 }' | wc -l; done 2>/dev/null

------------

2020-01

101

------------

2020-02

73

------------

2020-03

92

------------

2020-04

91

------------

2020-05

87

------------

2020-06

73

------------

2020-07

81

------------

2020-08

97

------------

2020-09

135

[user@host ~]$ zgrep "html HTTP.*200.*[0-9]\{4\} \"\(https://www.google\|https://www.bing\|https://www.qwant\|https://duckduckgo\)" 2021-08-20-shebangthedolphins.net.log.gz | grep html | awk '{ print $1" "$7 }' | sort -n | uniq | awk '{ print $2 }' | sort | uniq -c | sort -n

[…]

8 /windows_grouppolicy_manage_searchbox.html

9 /windows_icacls.html

10 /vpn_openvpn_bullseye.html

11 /gnulinux_vnc_remotedesktop.html

11 /windows_rds_mfa.html

12 /fr/vpn_openvpn_windows_server.html

14 /windows_grouppolicy_shutdown.html

15 /gnulinux_nftables_examples.html

25 /vpn_openvpn_windows_server.html

72 /ubiquiti_ssh_commands.html

[user@host ~]$ zcat *.log.gz | grep "html HTTP.*200.*[0-9]\{4\} \"\(https://www.google\|https://www.bing\|https://www.qwant\|https://duckduckgo\)" | grep html | awk '{ print $1" "$7 }' | sort -n | uniq | awk '{ print $2 }' | sort | uniq -c | sort -n

[…]

11 /gnulinux_vnc_remotedesktop.html

11 /openbsd_packetfilter.html

12 /fr/gnulinux_nftables_examples.html

15 /windows_imule.html

20 /backup_burp.html

36 /fr/vpn_openvpn_buster.html

38 /vpn_openvpn_windows_server.html

112 /ubiquiti_ssh_commands.html

[user@host ~]$ YEAR=2021

[user@host ~]$ for i in $(seq -w 1 12); do echo "------------"; echo "$YEAR-$i"; zcat $YEAR-"$i"*.log.gz | grep "html HTTP.*200.*[0-9]\{4\} \"\(https://www.google\|https://www.bing\|https://www.qwant\|https://duckduckgo\)" | grep html | awk '{ print $1" "$7 }' | sort -n | uniq | wc -l; done 2>/dev/null

------------

2021-01

1311

------------

2021-02

1650

------------

2021-03

2566

------------

2021-04

3511

------------

2021-05

5452

------------

2021-06

6922

------------

2021-07

6437

------------

2021-08

4788
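The counting logic used above can be isolated in a function that reads log lines on standard input. This is a sketch rather than the exact command used above: `search_hits` is a hypothetical name, the regular expression is a slightly stricter extended-regex variation (the status field must be exactly 200, and the minimum size of four digits is dropped), and the sample lines are invented.

```shell
#!/bin/bash
# search_hits: read log lines on stdin and print the number of unique
# (client IP, page) pairs whose referrer is one of the search engines.
search_hits() {
    grep -E 'html HTTP[^"]*" 200 [0-9]+ "https://(www\.google|www\.bing|www\.qwant|duckduckgo)' \
        | awk '{ print $1" "$7 }' | sort -u | wc -l
}

# Invented sample: the first two lines are the same visitor and page,
# the last one has an internal referrer and must not be counted.
SAMPLE='203.0.113.7 - - [20/Aug/2021:10:15:32 +0200] "GET /windows_icacls.html HTTP/1.1" 200 18342 "https://www.google.com/" "Mozilla/5.0"
203.0.113.7 - - [20/Aug/2021:10:16:02 +0200] "GET /windows_icacls.html HTTP/1.1" 200 18342 "https://www.google.com/" "Mozilla/5.0"
198.51.100.9 - - [20/Aug/2021:11:02:10 +0200] "GET /backup_burp.html HTTP/1.1" 200 20110 "https://duckduckgo.com/" "Mozilla/5.0"
192.0.2.44 - - [20/Aug/2021:12:40:55 +0200] "GET /windows_icacls.html HTTP/1.1" 200 18342 "https://shebangthedolphins.net/index.html" "Mozilla/5.0"'

search_hits <<< "$SAMPLE"
```

With real logs it could be fed with something like `zcat 2021-08-*.log.gz | search_hits`.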

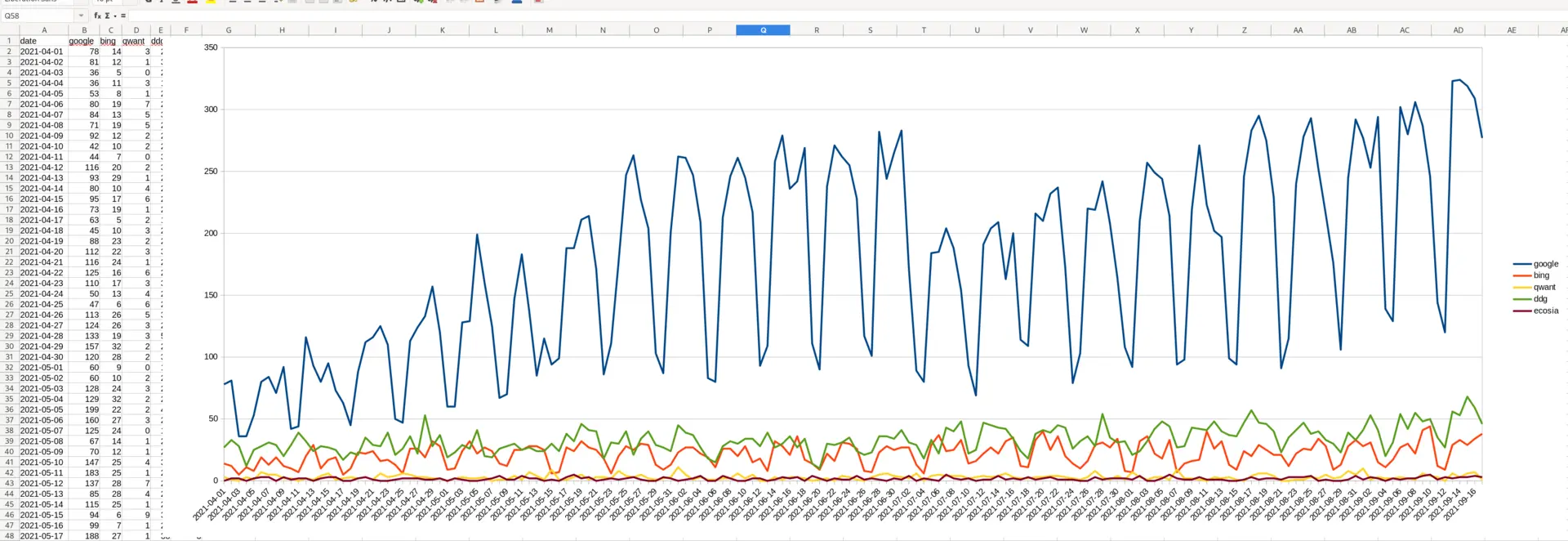

Here are the scripts I use to quickly see how the number of visits coming from search engines evolves.

#! /bin/sh

for LOGS in *.log.gz; do

echo "-----------------------------"

echo "LOGS : $LOGS"

for i in www.google www.bing www.qwant duckduckgo; do

RESULT=$(zgrep "html HTTP.*200.*[0-9]\{4\} \"https://"$i"" $LOGS | wc -l)

echo "$i = $RESULT"

done

done

-----------------------------

LOGS : 2020-11-21-shebangthedolphins.net.log.gz

www.google = 6

www.bing = 1

www.qwant = 0

duckduckgo = 4

-----------------------------

LOGS : 2020-11-22-shebangthedolphins.net.log.gz

www.google = 10

www.bing = 2

www.qwant = 0

duckduckgo = 6

-----------------------------

LOGS : 2020-11-23-shebangthedolphins.net.log.gz

www.google = 7

www.bing = 6

www.qwant = 2

duckduckgo = 1

#!/bin/bash

for LOGS in "$1"*.log.gz; do

TOTAL=0

echo "-----------------------------"

echo "LOGS : $LOGS"

for i in www.google www.bing www.qwant duckduckgo www.ecosia.org; do

RESULT=$(zgrep "html HTTP.*200.*[0-9]\{4\} \"https://"$i"" $LOGS | wc -l)

echo "$i = $RESULT"

TOTAL=$(($TOTAL+$RESULT))

done

echo "TOTAL $(date -d $(awk -F'-' '{ print $1"-"$2"-"$3 }' <<< $LOGS) '+%A') : $TOTAL"

done

[user@host ~]$ sh ./std_ovh.sh 2021-02-1

-----------------------------

LOGS : 2021-02-10-shebangthedolphins.net.log.gz

www.google = 31

www.bing = 15

www.qwant = 1

duckduckgo = 23

www.ecosia.org = 0

TOTAL wednesday : 70

-----------------------------

LOGS : 2021-02-11-shebangthedolphins.net.log.gz

www.google = 38

www.bing = 11

www.qwant = 2

duckduckgo = 24

www.ecosia.org = 0

TOTAL thursday : 75

-----------------------------
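The weekday shown on the TOTAL line is derived from the date at the start of the file name. A possible alternative to the `awk` call, sketched here with bash parameter expansion, is shown below; `log_weekday` is a hypothetical helper, GNU `date` is assumed, and `LC_ALL=C` forces English day names whatever the system locale is.

```shell
#!/bin/bash
# log_weekday FILE: print the weekday of a log file named YYYY-MM-DD-domain.log.gz.
log_weekday() {
    local name=${1##*/}      # strip any leading directory
    local day=${name:0:10}   # first 10 characters: YYYY-MM-DD
    LC_ALL=C date -d "$day" '+%A'
}

log_weekday 2021-02-10-shebangthedolphins.net.log.gz
```

Because `${name:0:10}` is a bash feature, this variant has to be run with `bash` rather than a strict POSIX `sh`.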

#!/bin/bash

# Role : Extract ovh logs stats

# Author : http://shebangthedolphins.net/

pages=false

csv=false

usage()

{

echo "usage: ./std_ovh.sh YYYY-MM-DD"

echo "[-c] : export total stats to /tmp/stats.csv file"

echo "[-p <url>|<XX most viewed pages>] : export specific <url> or XX most viewed urls to /tmp/stats.csv file"

echo "ex : ./std_ovh.sh 2021-03"

echo "ex : ./std_ovh.sh 2021-03 -c"

echo "ex : ./std_ovh.sh 2021-03 -p vpn_openvpn_windows_server.html"

echo "ex : ./std_ovh.sh 2021-03 -p 10"

exit 3

}

case "$1" in

*)

LOGS=$1

shift # Remove the first argument (which will be for example 2021-09-)

while getopts "p:ch" OPTNAME; do

case "$OPTNAME" in

p)

ARGP=${OPTARG}

pages=true

;;

c)

csv=true

;;

h)

usage

;;

*)

usage

;;

esac

done

esac

# show help if no arguments or -c AND -p are set

if [[ ( -z "$LOGS" ) || ( $pages == "true" && $csv == "true" ) ]] ; then

usage

fi

# create /tmp/stats.csv header

if $csv; then echo "date,google,bing,qwant,ddg,ecosia" > /tmp/stats.csv; fi

if $pages; then

if [[ "$ARGP" =~ ^[0-9]+$ ]]; then

HEAD=$ARGP

HTML="html"

else

HTML=$ARGP

HEAD=1

fi

for i in $(zgrep "$HTML HTTP.*200.*[0-9]\{4\} \"https://\(www.google\|www.bing\|www.qwant\|duckduckgo\|www.ecosia.org\)" $LOGS*.log.gz | sed 's/.*GET \(.*\) HTTP.*/\1/' | sort | uniq -c | sort -rn | head -n $HEAD | awk '{ print $2 }');

do

csv_header=$csv_header,$i

done

csv_header="date"$csv_header

echo "$csv_header" > /tmp/stats.csv

TMPFILE=$(mktemp) #create a temporary file with mktemp and store its path in TMPFILE. This file is used to improve performance (we store only the log lines we need in it).

fi

for LOGS in $(ls -1 $LOGS*.log.gz); do

if $pages

then

csv_data=$(date -d $(awk -F'-' '{ print $1"-"$2"-"$3 }' <<< $LOGS) '+%Y.%m.%d')

zgrep "html HTTP.*200.*[0-9]\{4\} \"https://\(www.google\|www.bing\|www.qwant\|duckduckgo\|www.ecosia.org\)" $LOGS > $TMPFILE #put interesting results inside TMPFILE

for i in $(sed 's/,/\n/g' /tmp/stats.csv | grep "html")

do

csv_data=$csv_data","$(zgrep "$i HTTP.*200.*[0-9]\{4\} \"https://\(www.google\|www.bing\|www.qwant\|duckduckgo\|www.ecosia.org\)" $TMPFILE | wc -l)

done

echo "$csv_data" >> /tmp/stats.csv

else

TOTAL=0

echo "-----------------------------"

echo "LOGS : $LOGS"

CSV=$(awk -F"-" '{ print $1"-"$2"-"$3 }' <<< $LOGS)

for i in www.google www.bing www.qwant duckduckgo www.ecosia.org; do

RESULT=$(zgrep "html HTTP.*200.*[0-9]\{4\} \"https://"$i"" $LOGS | wc -l)

echo "$i = $RESULT"

TOTAL=$(($TOTAL+$RESULT))

if $csv; then

CSV=$CSV,"$RESULT"

fi

done

echo "TOTAL $(date -d $(awk -F'-' '{ print $1"-"$2"-"$3 }' <<< $LOGS) '+%A %d %b %Y') : $TOTAL"

if $csv; then echo "$CSV" >> /tmp/stats.csv; fi

fi

done

if $pages; then rm $TMPFILE; fi #remove TMPFILE

[user@host ~]$ sh ./std_ovh.sh 2021- -c

[user@host ~]$ tail /tmp/stats.csv

date,google,bing,qwant,ddg,ecosia

2021-03-30,82,10,1,38,0

2021-03-31,87,26,5,32,0

[…]

2021-04-07,70,17,5,20,1

2021-04-08,71,19,5,29,0
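Once the CSV exists, totals per search engine over the exported period can be computed with `awk`. The following is a sketch, assuming the header and column order shown above; `csv_totals` is a hypothetical helper name and the sample data reuses the four rows displayed above.

```shell
#!/bin/bash
# csv_totals: read a CSV produced with -c on stdin and print the sum of each column.
csv_totals() {
    awk -F',' '
        NR == 1 { for (i = 2; i <= NF; i++) name[i] = $i; cols = NF; next }  # remember header names
        { for (i = 2; i <= NF; i++) sum[i] += $i }                            # add each daily value
        END { for (i = 2; i <= cols; i++) printf "%s=%d\n", name[i], sum[i] }
    '
}

csv_totals <<'EOF'
date,google,bing,qwant,ddg,ecosia
2021-03-30,82,10,1,38,0
2021-03-31,87,26,5,32,0
2021-04-07,70,17,5,20,1
2021-04-08,71,19,5,29,0
EOF
```

On the real file this would be called as `csv_totals < /tmp/stats.csv`.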

[user@host ~]$ sh ./std_ovh.sh 2021-09 -p 3

[user@host ~]$ tail /tmp/stats.csv

date,/ubiquiti_ssh_commands.html,/vpn_openvpn_bullseye.html,/vpn_openvpn_windows_server.html

2021.09.01,89,28,39

2021.09.02,109,19,41

[…]

2021.09.06,82,33,44

2021.09.07,94,37,29

[user@host ~]$ sh ./std_ovh.sh 2021-09 -p vpn_openvpn_bullseye.html

[user@host ~]$ tail /tmp/stats.csv

date,/vpn_openvpn_bullseye.html

2021.09.01,28

2021.09.02,19

[…]

2021.09.06,33

2021.09.07,37

PS C:\ > $domain = "shebangthedolphins.net"

PS C:\ > Select-String .\2020-11-05-shebangthedolphins.net.log -NotMatch -Pattern "Bytespider","Trident","bot","404","GET / HTTP","BingPreview","Seekport Crawler" | Select-String -Pattern "html" | %{"{0} {1}" -f $_.Line.ToString().Split(' ')[0],$_.Line.ToString().Split(' ')[10]} | Select-String -Pattern "$domain.*html" | Sort-Object | Get-Unique | %{"{0}" -f $_.Line.ToString().Split(' ')[1]} | group -NoElement | Sort-Object Count | %{"{0} {1}" -f $_.Count, $_.Name }

1 "https://shebangthedolphins.net/fr/prog_introduction.html"

1 "https://shebangthedolphins.net/fr/prog_sh_check_snmp_synology.html"

1 "http://shebangthedolphins.net/openbsd_network_interfaces.html"

1 "https://shebangthedolphins.net/fr/menu.html"

1 "https://shebangthedolphins.net/fr/windows_commandes.html"

1 "https://shebangthedolphins.net/fr/windows_run_powershell_taskschd.html"

1 "http://shebangthedolphins.net/prog_sh_check_snmp_synology.html"

1 "https://shebangthedolphins.net/fr/windows_grouppolicy_reset.html"

1 "https://shebangthedolphins.net/fr/windows_grouppolicy_update_policy.html"

1 "https://shebangthedolphins.net/virtualization_kvm_windows10.html"

1 "https://shebangthedolphins.net/windows_event_on_usb.html"

1 "https://shebangthedolphins.net/prog_powershell_movenetfiles.html"

1 "http://shebangthedolphins.net/prog_autoit_backup.html"

1 "https://shebangthedolphins.net/fr/vpn_openvpn_buster.html"

1 "http://shebangthedolphins.net/prog_powershell_kesc.html"

1 "https://shebangthedolphins.net/index.html"

1 "http://shebangthedolphins.net/gnulinux_courier.html"

4 "https://shebangthedolphins.net/ubiquiti_ssh_commands.html"

6 "https://shebangthedolphins.net/vpn_openvpn_windows_server.html"
